How to Automate Log Parsing for Large-Scale Environments

In the world of modern, distributed systems—especially those leveraging microservices, containers, and multi-cloud architectures—logs have ballooned into an overwhelming torrent of data. For large-scale environments, log volumes can easily reach gigabytes or even terabytes per day, making manual analysis virtually impossible.

The key to unlocking the invaluable insights hidden within this massive, unstructured data is automation. Specifically, automating the crucial first step: log parsing.

Log parsing is the process of transforming raw, unstructured log messages (e.g., plain text lines) into a structured format (like JSON or key-value pairs), making the data easily searchable, queryable, and analyzable by machines. This comprehensive guide delves into the challenges of large-scale log parsing and provides a detailed roadmap for building a robust, automated log parsing pipeline.

The Log Parsing Challenge in Large-Scale Systems

Before diving into solutions, it's essential to understand the unique challenges that high-volume, enterprise-scale logging presents:

1. Massive and Increasing Volume (Velocity)

Logs are generated continuously and rapidly. Handling hundreds of millions of log messages across thousands of servers requires a pipeline capable of high-throughput ingestion and processing without introducing performance bottlenecks.

2. Inconsistent and Heterogeneous Formats (Variety)

A typical large environment uses dozens of different technologies, each generating logs in its own format.

- Operating System Logs: Syslog, Windows Event Logs.

- Application Logs: Custom plain text, Java stack traces, JSON, XML.

- Infrastructure Logs: Web server logs (NCSA/W3C), database logs, cloud flow logs.

- Log Format Evolution: As applications are updated, their log formats often change, breaking static parsing rules.

3. Unstructured or Semi-Structured Data

Most traditional logs are plain text, which is designed for human readability, not machine analysis. Extracting key information like timestamps, log levels, transaction IDs, and variable message fields from this chaotic text requires sophisticated techniques.

4. Need for Real-Time Processing

For critical tasks like anomaly detection and security incident response, analysis must be near real-time. Delays in parsing can lead to delayed detection and increased Mean Time to Detect (MTTD).

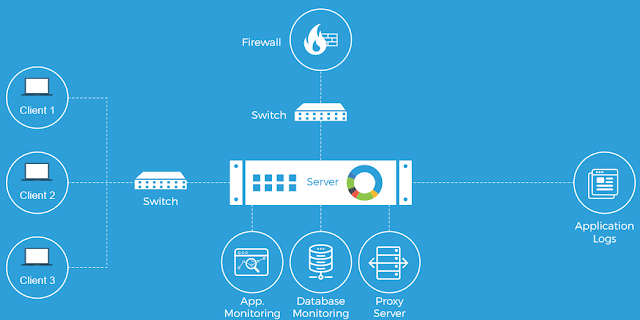

The Log Parsing Automation Workflow

Automating log parsing requires a multi-stage pipeline designed for scale and resilience. The standard log analysis pipeline includes: Collection, Parsing & Normalization, Storage & Indexing, and Analysis & Visualization.

1. Log Collection and Aggregation

The first step is centralizing logs from diverse sources. This requires lightweight, high-performance agents.

- Log Shippers (Agents): Tools like Fluent Bit (extremely lightweight, often used in containers) or Elastic Beats (Filebeat, Metricbeat) are installed on source hosts to tail log files and stream the data.

- Aggregation Layer (Broker): For truly massive scale, an intermediate message queue like Apache Kafka or RabbitMQ is essential. This decouples log ingestion from log processing, acting as a buffer against spikes in log volume and ensuring data durability. This is a critical component for fault tolerance in large-scale systems.
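The decoupling idea can be illustrated with a small in-process analogy. This is only a sketch: a bounded `queue.Queue` stands in for the broker, and it offers none of the durability or distribution of Kafka or RabbitMQ. The function name `run_pipeline` and the uppercase "parsing" step are placeholders invented for the example.

```python
import queue
import threading

def run_pipeline(lines, buffer_size=100):
    """Ship lines through a bounded buffer to an independent consumer."""
    buffer = queue.Queue(maxsize=buffer_size)  # stand-in for a broker topic
    done = object()                            # marker that the shipper has finished
    processed = []

    def shipper():
        for line in lines:
            buffer.put(line)   # blocks while the buffer is full (backpressure)
        buffer.put(done)

    def parser():
        while True:
            item = buffer.get()
            if item is done:
                break
            processed.append(item.upper())  # placeholder for real parsing work

    threads = [threading.Thread(target=shipper), threading.Thread(target=parser)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return processed
```

Because the shipper and the parser only share the buffer, either side can slow down or speed up without the other needing to know, which is the property the broker provides at cluster scale.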

2. Core Log Parsing Techniques

Once logs are aggregated, the automated parsing engine converts the raw data into a structured template.

A. Rule-Based Parsing (Regular Expressions/Grok)

For established, predictable log formats, Regular Expressions (Regex) or the higher-level Grok patterns are the standard workhorse.

- How it works: A pattern is defined to match the constant text of a log message and capture the variable data into named fields.

- Example: A raw log line like [2025-12-09 10:00:00] INFO User:1234 logged in from 192.168.1.1 can be parsed by a Grok pattern assembled from these pieces:

- \[%{TIMESTAMP_ISO8601:timestamp}\]

- %{LOGLEVEL:level}

- User:%{INT:user_id}

- logged in from %{IP:source_ip}

- Limitation at Scale: This approach is brittle. Any minor change in the log format (e.g., adding a field) breaks the regex, requiring manual maintenance and updates. That is a significant overhead in rapidly evolving environments.
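The same rule can be sketched with Python's standard `re` module and named groups. This is a plain-regex approximation of the Grok pattern, not Grok itself; the name `parse_login` and the simplified timestamp and IP sub-patterns are assumptions made for illustration.

```python
import re

# Constant text is written literally; variable parts are named capture groups.
LOGIN_PATTERN = re.compile(
    r"\[(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\] "
    r"(?P<level>[A-Z]+) "
    r"User:(?P<user_id>\d+) logged in from "
    r"(?P<source_ip>\d{1,3}(?:\.\d{1,3}){3})"
)

def parse_login(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LOGIN_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["user_id"] = int(fields["user_id"])  # convert the numeric field
    return fields

parsed = parse_login("[2025-12-09 10:00:00] INFO User:1234 logged in from 192.168.1.1")

# A small format change (an extra request-id field) no longer matches.
changed = parse_login("[2025-12-09 10:00:00] INFO [req-7] User:1234 logged in from 192.168.1.1")
```

Here `parsed` holds the four named fields, while `changed` is `None`, which is the brittleness described in the limitation above.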

B. Machine Learning/Clustering-Based Log Parsing

For environments with dynamic, often-changing, or entirely custom log formats, data-driven methods provide the necessary automation and robustness. These algorithms automatically discover the underlying structure or "log template" from the raw text.

- How it works: These methods (e.g., Drain, IPLoM, LogCluster) treat log messages as a corpus of text and use techniques like:

- Tokenization: Breaking the log line into words/tokens.

- Grouping/Clustering: Grouping similar log messages based on the number of tokens or common constant tokens.

- Template Generation: Identifying the constant parts (the event template) and the variable parts (the parameters) within each group.

- Advantages: They require no pre-configuration or human knowledge of the log format, automatically adapting to new log events and format changes, which is critical for large, complex systems.

- Parallel Parsing (POP): For truly large volumes, parallel parsers like POP (Parallel Log Parsing) leverage distributed processing frameworks (like Apache Spark) to achieve high efficiency by breaking down the log stream and processing it across multiple nodes simultaneously.
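The three steps (tokenize, group, generate templates) can be sketched in a few lines. This is a deliberately simplified toy, not an implementation of Drain, IPLoM, or LogCluster: it groups lines by token count and merges a line into the first template that shares enough tokens. The name `mine_templates`, the `<*>` wildcard, and the `min_similarity` threshold are assumptions for the example.

```python
from collections import defaultdict

WILDCARD = "<*>"

def mine_templates(lines, min_similarity=0.5):
    """Discover templates by grouping on token count and shared tokens."""
    groups = defaultdict(list)  # token count -> list of templates (token lists)
    for line in lines:
        tokens = line.split()            # tokenization
        if not tokens:
            continue
        candidates = groups[len(tokens)]  # grouping by number of tokens
        for template in candidates:
            same = sum(1 for a, b in zip(template, tokens) if a == b)
            if same / len(tokens) >= min_similarity:
                # Template generation: positions that differ become parameters.
                for i, (a, b) in enumerate(zip(template, tokens)):
                    if a != b:
                        template[i] = WILDCARD
                break
        else:
            candidates.append(tokens)     # first line of a new template
    return [" ".join(t) for templates in groups.values() for t in templates]

templates = mine_templates([
    "User 1 logged in",
    "User 2 logged in",
    "Disk sda1 is full",
    "Connection closed",
])
```

With this input the two login lines collapse into `User <*> logged in`, while the other two lines stay as their own templates. Real parsers add preprocessing (masking numbers and IPs) and smarter search structures, which this sketch omits.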

3. Log Normalization and Enrichment

Parsing alone provides structure, but to enable cross-system analysis, the data must be normalized and enriched.

A. Normalization

Normalization involves standardizing field names, formats, and values across heterogeneous log sources.

- Standardized Schema: Mapping source-specific fields (e.g., srcAddr, source_ip, client-ip) to a unified, standardized field name (e.g., network.source.ip). This is often guided by a unified schema like the Elastic Common Schema (ECS).

- Format Conversion: Converting all timestamps to a single, standardized format (e.g., ISO 8601 UTC) and standardizing log levels (e.g., mapping ERR, Error, [E] to ERROR).
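A minimal sketch of both normalization steps follows. The alias tables reuse the field and level names from the examples above; the function name `normalize` and the assumption that incoming timestamps look like `2025-12-09 10:00:00` and are already in UTC are illustrative choices, not requirements of any schema.

```python
from datetime import datetime, timezone

# Source-specific field names mapped to one unified name.
FIELD_ALIASES = {
    "srcAddr": "network.source.ip",
    "source_ip": "network.source.ip",
    "client-ip": "network.source.ip",
}

# Level spellings (after upper-casing) mapped to one canonical value.
LEVEL_ALIASES = {"ERR": "ERROR", "[E]": "ERROR", "WARN": "WARNING"}

def normalize(event, source_tz=timezone.utc):
    """Return a copy of the event with unified names, level, and timestamp."""
    out = {FIELD_ALIASES.get(key, key): value for key, value in event.items()}
    if "level" in out:
        level = str(out["level"]).upper()
        out["level"] = LEVEL_ALIASES.get(level, level)
    if "timestamp" in out:
        # Assumed input format; real pipelines usually try several formats.
        ts = datetime.strptime(out["timestamp"], "%Y-%m-%d %H:%M:%S")
        ts = ts.replace(tzinfo=source_tz).astimezone(timezone.utc)
        out["timestamp"] = ts.strftime("%Y-%m-%dT%H:%M:%SZ")
    return out

normalized = normalize(
    {"srcAddr": "10.0.0.1", "level": "Err", "timestamp": "2025-12-09 10:00:00"}
)
```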

B. Enrichment

Enrichment adds external, contextual data to the parsed log event, making it more actionable.

- Geolocation: Adding latitude/longitude and country information based on a parsed IP address.

- Threat Intelligence: Cross-referencing parsed IP addresses or domain names against known threat feeds to flag malicious activity.

- User/Asset Context: Adding the full name or department of a user (from an HR or Identity Management system) or the asset tag/owner of a server (from a CMDB) based on a parsed UserID or Hostname.
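Enrichment is essentially a set of lookups keyed on parsed fields. The sketch below uses in-memory tables as stand-ins for a threat feed and an identity system; the table contents, the field names, and the function `enrich` are all hypothetical, and the network shown is a reserved documentation range rather than real threat data.

```python
import ipaddress

# Hypothetical threat feed: networks considered suspicious.
THREAT_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]

# Hypothetical identity directory keyed by user ID.
USER_DIRECTORY = {"1234": {"name": "Ada Example", "department": "Finance"}}

def enrich(event):
    """Return a copy of the event with threat and user context added."""
    enriched = dict(event)
    ip = event.get("source_ip")
    if ip is not None:
        address = ipaddress.ip_address(ip)  # raises ValueError on malformed input
        enriched["threat_match"] = any(address in net for net in THREAT_NETWORKS)
    user = USER_DIRECTORY.get(str(event.get("user_id")))
    if user is not None:
        enriched["user_name"] = user["name"]
        enriched["user_department"] = user["department"]
    return enriched

flagged = enrich({"source_ip": "203.0.113.7", "user_id": 1234})
clean = enrich({"source_ip": "192.168.1.1"})
```

In a production pipeline the lookups would typically be cached, since calling an external system for every event would undo the throughput gains of the rest of the pipeline.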

Scalable Architecture and Tooling 🛠️

Automating log parsing at scale necessitates robust, high-performance tooling capable of distributed processing.

The ELK Stack (Elasticsearch, Logstash, Kibana)

The Elastic Stack remains one of the most widely used solutions for large-scale log management.

- Logstash: The powerful, flexible server-side data processing pipeline. It can ingest logs from various sources, apply complex parsing rules (Grok, JSON filters), perform normalization, and enrich data before outputting. It is often the primary engine for custom parsing.

- Elasticsearch: A distributed, real-time search and analytics engine that stores and indexes the structured log data, enabling complex, low-latency queries across massive datasets.

- Kibana: The visualization and exploration layer for analyzing the structured data.

The CNCF Ecosystem (Fluentd/Fluent Bit & Grafana Loki)

For modern, cloud-native (Kubernetes/Microservices) environments, a lighter, more distributed approach is often preferred.

- Fluentd/Fluent Bit: These are the industry standard for log collection and routing in cloud-native settings. Fluent Bit is extremely lightweight and resource-efficient, making it ideal for running as a DaemonSet in Kubernetes. They focus on reliable collection and forwarding rather than heavy parsing.

- Grafana Loki: An open-source, cloud-native log aggregation system that uses a novel "index-less" approach. It stores log data as compressed chunks in object storage (like S3) and indexes only a minimal set of metadata (labels) for query efficiency. The parsing step (like Grok) is often applied at query time or handled by a processing agent (e.g., Fluent Bit or a Loki client) before ingestion.

Parallel Processing Frameworks

For true multi-petabyte analysis and complex log mining operations, dedicated big data tools are necessary.

- Apache Spark: The leading unified analytics engine for large-scale data processing. Its ability to process data in-memory makes it ideal for running sophisticated machine learning-based log parsing algorithms (like POP) or for performing complex correlation and anomaly detection across historical log data.

Best Practices for Automation Success ✅

1. Shift-Left: Enforce Structured Logging

The single most effective strategy for automating parsing is to eliminate the need for complex parsing. This is achieved by implementing Structured Logging at the application development level.

- Instead of writing a raw text log line, the application outputs a structured format, typically JSON.

- Raw Log (Difficult to parse): 2025-12-09 10:00:00 INFO User 'admin' failed login from IP 10.0.0.1.

- Structured Log (Easy to parse): {"timestamp": "2025-12-09T10:00:00Z", "level": "INFO", "event": "login_failed", "user": "admin", "client_ip": "10.0.0.1", "status_code": 401}

When logs are already in JSON, the "parsing" step becomes a simple, high-performance JSON filter, making the log pipeline faster, simpler, and much more resilient to change.
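One way an application might emit such lines is a custom formatter on Python's standard `logging` module. This is a sketch under assumptions: the class `JsonFormatter`, the helper `make_logger`, and the convention of passing extra fields under a `fields` key are invented for the example, and an in-memory stream is used so the output can be inspected.

```python
import io
import json
import logging
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        created = datetime.fromtimestamp(record.created, tz=timezone.utc)
        payload = {
            "timestamp": created.strftime("%Y-%m-%dT%H:%M:%SZ"),
            "level": record.levelname,
            "event": record.getMessage(),
        }
        # Structured fields passed via extra={"fields": {...}}.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)

def make_logger(stream):
    logger = logging.getLogger("structured-demo")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger

stream = io.StringIO()
logger = make_logger(stream)
logger.info(
    "login_failed",
    extra={"fields": {"user": "admin", "client_ip": "10.0.0.1", "status_code": 401}},
)

# Downstream, "parsing" is a single json.loads call.
record = json.loads(stream.getvalue())
```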

2. Isolate and Validate Parsing Rules

In your log processing pipeline (e.g., Logstash or dedicated parsing service), create separate, isolated parsing pipelines for each distinct log format.

- Filter Early: Use initial filters (e.g., based on source file path, application name, or initial pattern match) to route logs to the correct, dedicated parsing pipeline.

- Continuous Validation: Implement a testing framework that validates parsing rules against a large, representative sample of historical logs. This ensures that new deployments or format changes don't silently break your parsing logic.
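Both practices can be sketched together: route each line to a dedicated parser by source path, then measure how many sample lines each parser still matches. The parser names, the routing rule, the two regexes, and the helper `match_rate` are assumptions for illustration; a real check would run against a much larger historical sample.

```python
import re

# One isolated rule per log format.
PARSERS = {
    "nginx": re.compile(
        r'(?P<client_ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
        r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\d+|-)'
    ),
    "app": re.compile(r"\[(?P<timestamp>[^\]]+)\] (?P<level>[A-Z]+) (?P<message>.*)"),
}

def route(source_path):
    """Filter early: pick the parser from the source file path."""
    return "nginx" if "nginx" in source_path else "app"

def parse(source_path, line):
    match = PARSERS[route(source_path)].match(line)
    return match.groupdict() if match else None

def match_rate(source_path, sample_lines):
    """Fraction of sample lines the routed parser can handle."""
    if not sample_lines:
        return 0.0
    parsed = sum(1 for line in sample_lines if parse(source_path, line) is not None)
    return parsed / len(sample_lines)

nginx_rate = match_rate(
    "/var/log/nginx/access.log",
    ['192.168.1.1 - - [09/Dec/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 512'],
)
app_rate = match_rate(
    "/var/log/app/app.log",
    ["[2025-12-09 10:00:00] INFO started", "unexpected new format"],
)
```

A CI job could fail the build when a rate such as `app_rate` drops below an agreed threshold, which surfaces format drift before it reaches production dashboards.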

3. Focus on Critical Fields

In high-volume environments, attempting to parse every single detail in every log message can significantly increase computational load.

- Optimize for Value: Prioritize extracting only the fields absolutely necessary for core operational monitoring, security, and alerting (e.g., timestamp, log level, unique transaction ID, source/destination).

- Delay Complex Parsing: If a complex, multi-line stack trace or deep textual analysis is only needed occasionally, consider storing the raw log and applying the intensive parsing logic at query time (or as a separate, scheduled job) to keep the ingestion pipeline lean.
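A sketch of this split: the ingest step extracts only the timestamp and level and keeps the raw text, while stack-frame extraction runs later and only on demand. The function names `ingest` and `stack_frames`, the header layout, and the Java-style frame regex are assumptions for the example.

```python
import re

# Cheap header match used on every event at ingest time.
HEADER = re.compile(r"(?P<timestamp>\S+ \S+) (?P<level>[A-Z]+) ")

# Expensive detail match, applied only when someone asks for it.
FRAME = re.compile(r"^[ \t]+at (?P<method>[\w.$<>]+)\((?P<location>[^)]*)\)", re.MULTILINE)

def ingest(raw):
    """Extract only the critical fields; store the raw text for later."""
    match = HEADER.match(raw)
    if match is None:
        return {"raw": raw}
    return {"timestamp": match["timestamp"], "level": match["level"], "raw": raw}

def stack_frames(event):
    """Deferred parsing: pull stack frames out of the stored raw text."""
    return [m.groupdict() for m in FRAME.finditer(event["raw"])]

event = ingest(
    "2025-12-09 10:00:00 ERROR Unhandled exception\n"
    "java.lang.IllegalStateException: boom\n"
    "    at com.example.Service.run(Service.java:42)\n"
    "    at com.example.Main.main(Main.java:7)"
)
frames = stack_frames(event)
```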

4. Leverage Advanced ML/AI for Anomaly Detection

With log parsing automated and data structured, the next frontier is using Machine Learning to go beyond basic threshold alerting.

- Log Template-Based Anomaly Detection: Instead of monitoring the count of specific log messages, monitor the sequence of log templates. An unusual sequence of events, even if each event is individually benign, can indicate an underlying issue.

- Clustering of Anomalies: Use clustering on the variable parameters within the parsed logs to group rare or anomalous events, helping engineers pinpoint the cause faster.
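A very simple form of sequence monitoring counts which template follows which during normal operation and flags transitions that were rarely or never seen. This is a frequency-based sketch rather than a machine learning model; the template names and the functions `learn_transitions` and `unseen_transitions` are hypothetical.

```python
from collections import Counter

def learn_transitions(sequences):
    """Count adjacent template pairs across known-good sequences."""
    counts = Counter()
    for sequence in sequences:
        counts.update(zip(sequence, sequence[1:]))
    return counts

def unseen_transitions(sequence, counts, min_count=1):
    """Return the adjacent pairs seen fewer than min_count times in training."""
    return [pair for pair in zip(sequence, sequence[1:]) if counts[pair] < min_count]

baseline = learn_transitions([["login", "query", "logout"]] * 3)

# Each event is individually normal, but the order is not.
suspicious = unseen_transitions(["login", "logout", "query"], baseline)
normal = unseen_transitions(["login", "query", "logout"], baseline)
```

Here `suspicious` contains the two out-of-order transitions and `normal` is empty. Sequence models used in practice generalize this idea to longer contexts.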

Conclusion

Automating log parsing is not just a desirable feature for large-scale environments; it is a necessity for operational survival and security. The sheer volume and variety of modern log data demand a shift away from brittle, manual rule-based parsing toward a dynamic, layered approach.
