Top Firewall Log Monitoring Challenges Enterprises Face

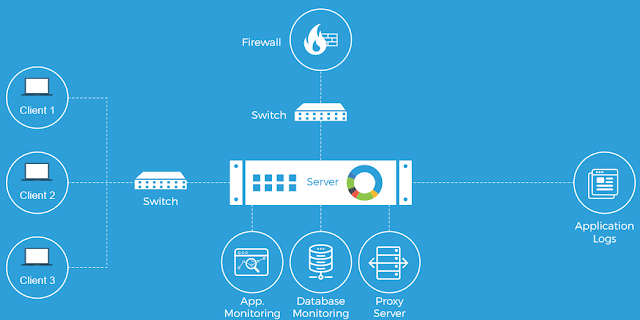

Firewalls are the digital gatekeepers of the modern enterprise network, acting as the first line of defense against an ever-evolving threat landscape. Yet the real work doesn't stop with deployment. The colossal volume of logs they generate, recording every connection attempt, rule hit, and policy change, is an invaluable source of security intelligence.

However, extracting this value is a Herculean task. Enterprise-level firewall log monitoring presents a unique set of challenges that can leave security teams overwhelmed, leading to blind spots, delayed threat response, and costly compliance issues. This article dives deep into the top operational and technical hurdles organizations face in effective firewall log monitoring.

1. The Sheer Volume and Velocity of Log Data

The single greatest challenge for any enterprise is the scale of the data. In large, high-traffic environments, a firewall can generate billions of log entries daily. This creates several immediate operational and financial problems:

- Log Overload and Noise: The vast majority of log entries are benign "allow" or routine "deny" traffic. Identifying the handful of critical security events (the "needles") within this massive haystack of "noise" is exceptionally difficult and often exceeds a security analyst's capacity.
- Analyst Fatigue: This constant deluge of low-value alerts leads to alert fatigue, causing teams to become desensitized and potentially miss genuine, high-priority threats.
- Storage and Compute Costs: Log volume directly drives the cost of storage, indexing, and processing power, especially when using Security Information and Event Management (SIEM) systems. As traffic grows, so does the strain on the IT budget.
- Performance Bottlenecks: Inefficient ingestion and processing of logs can lead to performance issues and latency, slowing down the very analytics systems intended to secure the network.
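One common way to reduce noise before logs reach an analyst is to collapse repeated flows into a single row with a count, then look separately at the rare ones. The sketch below assumes entries are already parsed into dictionaries; the field names (`src_ip`, `dst_ip`, `dst_port`, `action`) and the threshold are illustrative assumptions, not any vendor's format.

```python
from collections import Counter


def summarize_flows(entries):
    """Collapse identical (src, dst, port, action) flows into one row with a count."""
    counts = Counter(
        (e["src_ip"], e["dst_ip"], e["dst_port"], e["action"]) for e in entries
    )
    # most_common() orders rows from most to least frequent.
    return [
        {"src_ip": s, "dst_ip": d, "dst_port": p, "action": a, "count": n}
        for (s, d, p, a), n in counts.most_common()
    ]


def split_noise(summary, threshold):
    """Separate high-volume routine flows from rare ones worth a closer look."""
    routine = [row for row in summary if row["count"] >= threshold]
    rare = [row for row in summary if row["count"] < threshold]
    return routine, rare


# Hypothetical, already-parsed log entries (documentation-range addresses).
sample = [
    {"src_ip": "10.0.0.5", "dst_ip": "10.0.1.10", "dst_port": 443, "action": "allow"},
    {"src_ip": "10.0.0.5", "dst_ip": "10.0.1.10", "dst_port": 443, "action": "allow"},
    {"src_ip": "10.0.0.5", "dst_ip": "10.0.1.10", "dst_port": 443, "action": "allow"},
    {"src_ip": "203.0.113.7", "dst_ip": "10.0.1.10", "dst_port": 22, "action": "deny"},
]
routine, rare = split_noise(summarize_flows(sample), threshold=3)
print(len(routine), "routine flow(s);", len(rare), "rare flow(s)")
```

A rare flow is not necessarily malicious, and a frequent one is not necessarily benign, so a split like this is only a first triage step and the threshold would need tuning per environment.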

2. Lack of Standardization and Data Inconsistency

Enterprises rarely standardize on a single firewall vendor. Hybrid environments often involve an intricate mix of vendors (Cisco, Palo Alto Networks, Check Point, Fortinet) as well as cloud-native firewalls (AWS Security Groups, Azure Firewall). This heterogeneity introduces a significant data challenge:

- Parsing and Normalization: Each firewall vendor formats its logs differently. A log field for "Source IP Address" in one system might be called something entirely different in another. Before effective correlation can happen, all logs must be collected, parsed, and normalized into a common, searchable format. This process is complex, time-consuming, and prone to error.
- API and Protocol Differences: Log collection mechanisms (Syslog, proprietary APIs, or cloud-specific connectors) vary widely, requiring security teams to maintain numerous custom integrations.
- Missing Context: Logs from the firewall alone often lack the necessary context (e.g., the specific user involved, the business application, or the asset's vulnerability score) to determine if an event is a true threat or a false positive.
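The normalization and context steps described above can be sketched as a field-mapping table plus a lookup. Everything named here is an assumption for illustration: `vendor_a` and `vendor_b`, their field names, the action aliases, and the `ASSETS` inventory are hypothetical and do not reflect any real vendor's log schema.

```python
# Hypothetical per-vendor field names mapped onto one common schema.
# Real mappings must come from each vendor's log documentation.
FIELD_MAPS = {
    "vendor_a": {"src": "src_ip", "dst": "dst_ip", "dport": "dst_port", "act": "action"},
    "vendor_b": {
        "SourceAddress": "src_ip",
        "DestAddress": "dst_ip",
        "DestPort": "dst_port",
        "Disposition": "action",
    },
}

# Different spellings of the same outcome, folded into two canonical values.
ACTION_ALIASES = {
    "permit": "allow", "accept": "allow", "allow": "allow",
    "deny": "deny", "drop": "deny", "block": "deny",
}

# Hypothetical asset inventory: context the firewall itself does not log.
ASSETS = {"10.0.1.10": {"owner": "payments-team", "criticality": "high"}}


def normalize(vendor, raw):
    """Rename vendor-specific fields and coerce values to common types."""
    mapping = FIELD_MAPS[vendor]
    event = {common: raw[native] for native, common in mapping.items() if native in raw}
    if "dst_port" in event:
        event["dst_port"] = int(event["dst_port"])
    if "action" in event:
        event["action"] = ACTION_ALIASES.get(str(event["action"]).lower(), "unknown")
    event["vendor"] = vendor
    return event


def enrich(event, assets=ASSETS):
    """Attach asset context for the destination, or None if it is unknown."""
    return {**event, "dst_asset": assets.get(event.get("dst_ip"))}


raw_a = {"src": "10.0.0.5", "dst": "10.0.1.10", "dport": "443", "act": "permit"}
print(enrich(normalize("vendor_a", raw_a)))
```

Keeping the mappings as data rather than code means a new vendor is a new dictionary entry, which is roughly how SIEM parsers tend to be organized, although production parsers also have to handle malformed and multi-line records.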

3. Misconfigurations and Rule Bloat

The firewall's operational state is intrinsically linked to the quality of its logs. Errors in configuration often manifest as monitoring challenges:

- Insufficient Logging: A common and dangerous challenge is simply not logging the right events. Some organizations disable logging for high-volume, low-priority rules to save space, but this can create a severe blind spot if a malicious actor begins exploiting that traffic. Conversely, overly verbose logging for routine traffic increases noise.
- Rule Bloat and Redundancy: Over years of changes, firewall rulesets become riddled with obsolete, redundant, or "shadowed" rules (rules that are never hit because an earlier, broader rule catches the traffic first).
  - Security Risk: These zombie rules create unnecessary attack surface and make the ruleset, and thus the logs it generates, far harder to interpret and audit.
- Tracking Rule Changes: A single incorrect rule change, a common cause of breaches, can open up a vulnerability. Without a robust log monitoring system that diligently tracks and alerts on administrative changes to the rule base, establishing who changed what (non-repudiation) and tracing the cause of a breach becomes nearly impossible.
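The idea of a shadowed rule can be made concrete with a small check: under first-match evaluation, a rule is shadowed when some earlier rule covers its entire source range, destination range, and port range. This is a minimal sketch under simplifying assumptions: the `Rule` shape is hypothetical, rules are evaluated top-down, and only coverage by a single earlier rule is detected (not coverage by a combination of several rules).

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    src: str      # source network in CIDR notation
    dst: str      # destination network in CIDR notation
    ports: tuple  # inclusive (low, high) destination port range
    action: str


def covers(earlier, later):
    """True if `earlier` matches every packet that `later` would match."""
    e_src, l_src = ipaddress.ip_network(earlier.src), ipaddress.ip_network(later.src)
    e_dst, l_dst = ipaddress.ip_network(earlier.dst), ipaddress.ip_network(later.dst)
    # subnet_of() raises TypeError across IP versions, so compare versions first.
    if e_src.version != l_src.version or e_dst.version != l_dst.version:
        return False
    return (
        l_src.subnet_of(e_src)
        and l_dst.subnet_of(e_dst)
        and earlier.ports[0] <= later.ports[0]
        and later.ports[1] <= earlier.ports[1]
    )


def find_shadowed(rules):
    """Return (shadowed_rule, shadowing_rule) name pairs for an ordered ruleset."""
    shadowed = []
    for i, later in enumerate(rules):
        for earlier in rules[:i]:
            if covers(earlier, later):
                shadowed.append((later.name, earlier.name))
                break
    return shadowed


ruleset = [
    Rule("allow-internal", "10.0.0.0/8", "0.0.0.0/0", (1, 65535), "allow"),
    Rule("allow-web", "10.1.0.0/16", "192.0.2.0/24", (443, 443), "allow"),
    Rule("deny-telnet", "0.0.0.0/0", "0.0.0.0/0", (23, 23), "deny"),
]
print(find_shadowed(ruleset))
```

In this hypothetical ruleset, `allow-web` can never be hit because `allow-internal` already matches all of its traffic. Real rulesets also match on zones, applications, users, and protocols, so a dedicated policy analysis tool would need to compare more dimensions than this sketch does.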

4. Limited Deep-Packet Visibility (Encrypted Traffic)

The rise of pervasive encryption in transit (TLS/SSL) has dramatically complicated firewall log analysis:

- The Encryption Blind Spot: When traffic is encrypted, the firewall can see the source, destination, and port, but it cannot inspect the payload for malicious content like malware or data exfiltration attempts. This creates a critical blind spot in the logs.
- Decryption Overhead: While Next-Generation Firewalls (NGFWs) offer SSL/TLS decryption capabilities, implementing them for deep inspection is resource-intensive. It can lead to significant network slowdowns and performance issues, making many enterprises hesitant to enable it across all traffic. The logs, therefore, show only that a connection was encrypted, not that its payload was safe.

5. Compliance and Long-Term Retention

Regulatory compliance mandates (such as PCI DSS, HIPAA, and GDPR) place strict requirements on log retention and review, adding a layer of logistical and security complexity:

- Auditing and Forensics: Logs must be retained for specific periods (often 90 days to several years) to support regulatory audits and forensic investigations following a breach. Storing this massive volume of data securely and making it quickly accessible for an investigation is a logistical nightmare.
- Log Tampering: For logs to be viable evidence in a forensic investigation, they must be demonstrably immutable and protected from tampering by an attacker who gains access to the network. This requires robust, secured, off-device log storage, often achieved via a centralized write-once, read-many (WORM) repository or SIEM.
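Alongside WORM storage, one complementary technique for making tampering detectable is a hash chain, in which each stored record includes a hash of the previous one. This is not something the practices above prescribe; it is a minimal sketch of a related idea, and the record layout (`entry`, `prev`, `hash`) and the `GENESIS` value are assumptions made for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash, entry):
    """SHA-256 over the previous hash plus a canonical JSON form of the entry."""
    payload = json.dumps(entry, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def chain_entries(entries):
    """Wrap each log entry in a record linked to its predecessor by hash."""
    prev = GENESIS
    chained = []
    for entry in entries:
        current = _digest(prev, entry)
        chained.append({"entry": entry, "prev": prev, "hash": current})
        prev = current
    return chained


def verify_chain(chained):
    """Recompute every hash; any edited, removed, or reordered record fails."""
    prev = GENESIS
    for record in chained:
        if record["prev"] != prev or record["hash"] != _digest(prev, record["entry"]):
            return False
        prev = record["hash"]
    return True


logs = [
    {"ts": "2024-01-01T00:00:00Z", "action": "deny", "src_ip": "203.0.113.7"},
    {"ts": "2024-01-01T00:00:05Z", "action": "allow", "src_ip": "10.0.0.5"},
]
chained = chain_entries(logs)
print(verify_chain(chained))
```

A chain like this only detects changes; it does not prevent them. An attacker who can rewrite the whole store can also recompute every hash, so the most recent hash still has to be anchored somewhere the attacker cannot reach, which is the role the off-device WORM repository plays.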

Overcoming the Log Monitoring Hurdles: Best Practices

To turn a flood of firewall data into actionable intelligence, enterprises must shift from passive storage to proactive, automated analysis.

| Challenge | Best Practice Solution | Technology Enabler |
| --- | --- | --- |
| Log Overload & Noise | Filtering and Optimization: Fine-tune firewall logging settings to capture only critical security and denied connection events. Artificial Ignorance: Use machine learning to automatically suppress or ignore routine, high-volume, low-value logs. | SIEM, Log Analytics Platforms, AI/ML-driven Security Tools |
| Data Inconsistency | Centralized Log Management: Aggregate all logs (firewall, endpoint, server) into a single, centralized platform for automatic parsing and normalization. | SIEM, Log Management Systems, Data Lake |
| Misconfigurations & Bloat | Automated Policy Audit: Schedule quarterly, automated reviews to identify and remove redundant, shadowed, or overly permissive rules. Change Tracking: Log and alert on all firewall rule/configuration changes in real-time. | Firewall Analyzer Tools, Configuration Management Solutions |
| Limited Visibility | Selective SSL Decryption: Strategically decrypt and inspect high-risk traffic categories while excluding sensitive data (e.g., healthcare, financial) to balance security and performance. | Next-Generation Firewall (NGFW), Decryption Proxies |
| Compliance & Retention | Secured, Long-Term Storage: Implement log retention policies that meet regulatory mandates, storing logs in an off-device, tamper-proof repository. Correlation: Use log correlation to link disparate events into a single, cohesive security incident. | Cloud Storage (WORM), Dedicated Log Archiving Solutions |

By adopting these practices and leveraging modern security automation and analytics platforms, enterprises can transform their firewall logs from an overwhelming liability into one of their most powerful defensive assets. The true value of a firewall is not just in what it blocks, but in the intelligence it provides about the threats that are actively trying to breach the perimeter.
